
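Pre-sales: a dedicated specialist consults with the customer to fully understand their requirements, then proposes a tailored system solution, including design and sample-making from customer drawings.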

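qy球友会官网 was founded in 2008. Its factory is located in the Songjiang South Industrial Zone, Shanghai, with 3,000 square meters of production floor space, 57 employees, and 7 design and technical staff. The factory mainly produces foam inner-packaging products, adhesive-backed formed foam products, and sound-damping foam products. Its parent company, 上海图梦实业有限公司, was founded in 2002 in Minhang District, Shanghai, and is a specialist supplier of logistics packaging design and solutions. After years of market experience and practice, it has grown into a production and service enterprise that combines the design, production, and processing of carton packaging and plastic foam cushioning packaging. The company is headquartered in Minhang, Shanghai, with production plants and distribution bases in Songjiang, Fengxian, and Jinshan.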

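Appearance design, material selection, and dimensions are all customized on demand; 固尔亿 is fully committed to meeting each requirement.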

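High-quality raw materials are selected, and strict process control and quality inspection ensure the quality of every product.

Professional production lines, more than a thousand customer cases, and capacity to take rush orders.

Packaging staff with many years of experience and our own delivery trucks ensure delivery whenever it is needed.

Professional customer service provides online after-sales support and responds quickly, offering attentive service.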

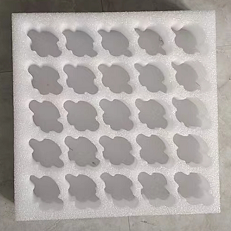

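Pearl foam egg tray packaging set: qy球友会官网 egg tray, formed at 275*275*70; hole depth 60, 25 holes per board; a 10 pearl foam board is bonded underneath; matching carton 290*290*170, one carton ......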
